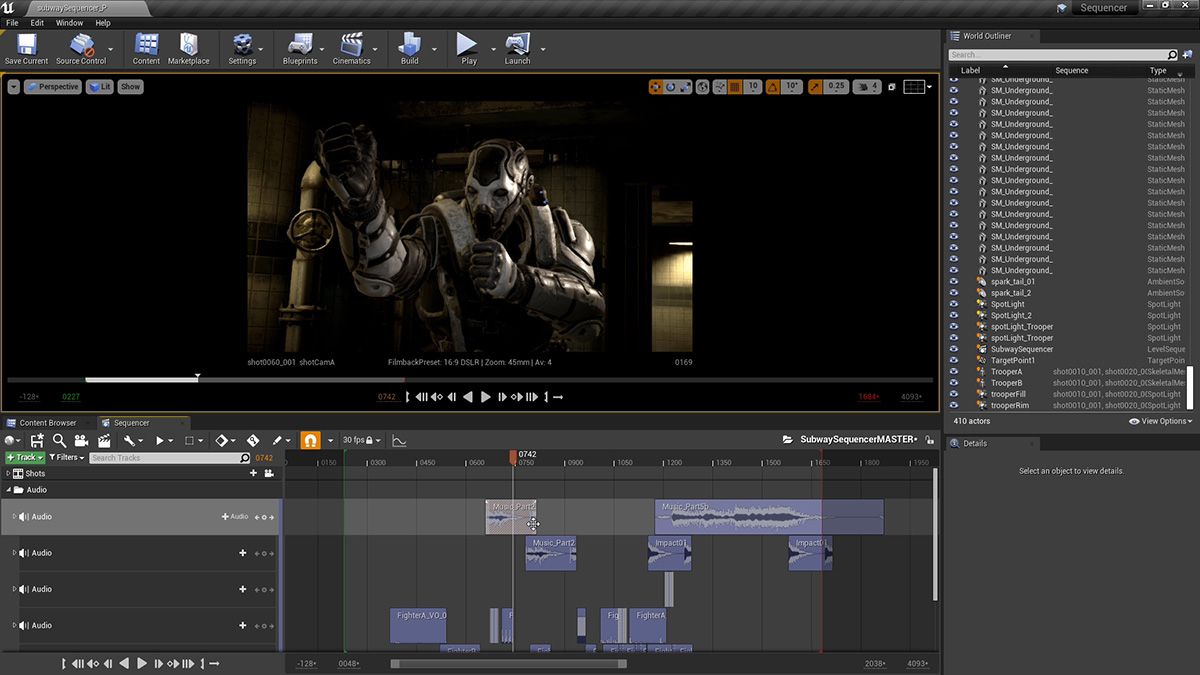

Here you apply the soundtrack used during motion capture to your sequence so that dialog and sound effects stay in sync with one another. As it did during the capture session, this soundtrack helps drive the scene. As other elements are visualized in-engine, you can add more sound effects, each one helping to inform the filmmaker where the camera should point and when.

- Categories: Training